Welcome to the fourth MaRDI Newsletter! This time we will investigate the fourth and final FAIR principle: Reusability. We consider the R in FAIR to capture the ultimate aim of sustainable and efficient handling of research data, that is to make your digital maths objects reusable for others and to reuse their results in order to advance science. In the words of the scientific computing community, we want mathematics to stand on the shoulders of giants rather than to be building on quicksand.

licensed under CC BY-NC-SA 4.0.

To achieve this, we need to make sure every tiny piece in a chain of results is where it should be, links seamlessly to its predecessors and subsequent results, is true, and is allowed to be embedded in the puzzle we try to solve. This last point is crucial, so we dedicate our main article in this issue of the newsletter to the topic of documentation, verifiability, licenses, and community standards for mathematical research data. We also feature some nice pure-maths examples we made for Love Data Week, report on the first MaRDI workshop for researchers in theoretical fields who are new to FAIR research data management, and entertain you with surveys and news from the world of research data.

To get into the mood of the topic, here is a question for you:

If you need to (re)use research data you created some time ago, how much time would you need to find and understand it? Would you have the data at your fingertips, or would you have to search for it for several days?

You will be taken to the results page automatically after submitting your answer, where you can find out how long other researchers would take. Additionally, the current results can be accessed here.

On the shoulders of giants

The famous quote from Newton, “If I have seen further, it is by standing on the shoulders of giants”, usually refers to how science is built on top of previous knowledge, with researchers basing their results on the works of scientists who came before them. One could reframe it by saying that scientific knowledge is reusable. This is a fundamental principle in the scientific community: once a result is published, anyone can read it, learn how it was achieved, and then use it as a basis for further research. Reusing knowledge is also ingrained in the practice of scientific research as the basis of verifiability. In the natural sciences, the scientific method demands that experimental data back your claims. In mathematical research, logical rigor demands a mathematical proof of your claims. This means that for good scientific practice, your results must be verifiable by other researchers, and this verification requires a reuse of not only the mental processes but also the data and tools used in the research.

Research data must be as reusable as the results and publications they support. From the perspective of modern, intensively data-driven science, this demand poses some challenges. Some barriers to reusability are technical, because of incompatibilities of standards or systems, and this problem is largely covered in the Interoperability principle of FAIR. But other problems such as poor documentation or legal barriers can be even bigger obstacles than technical inconveniences.

Reuse of research data is the ultimate goal of FAIR principles. The first three principles (Findable, Accessible, Interoperable) are necessary conditions for effective reuse of data. What we list here as “Reusability” requirements are all the remaining conditions, often more subjective or harder to evaluate, that appeal to the final goal of having a piece of research data embedded in a new chain of results.

To be precise, the Reusability principle requires data and metadata to be richly characterised with descriptors and attributes. Anyone potentially interested in reusing the data should easily find out if that data is useful for their purposes, how it can be used, how it was obtained, and any other practical concerns for reusing it. In particular, data and metadata should be:

- associated with detailed provenance

- released with a clear and accessible data usage license

- broadly aligned with agreed community standards of its discipline

Documentation

It is essential for researchers to acknowledge that the research data they generate is a first-class output of their scientific research and not only a private sandbox that helps them produce some public results. Hence, research data needs to be curated with reusability in mind, documenting all details (even some that might seem irrelevant or trivial to its authors) related to its source, scope, or use. In data management, we use the term “provenance” to describe the story and rationale behind that data. Why does it exist, what problem was it addressing, how it was gathered, transformed, stored, used… all this information might be relevant for a third party that first encounters the data and has to judge if it is relevant for themselves or not.

In experimental data, it is important to document exactly what the purpose of the experiment was, which protocol was followed to gather the data, who did the fieldwork (in case contact information is needed), which variables were recorded, how the data is organized, which software was used, which version of the dataset it is, etc. As an antithesis of the ideal situation, imagine that you, as a researcher, find out about an article that uses some statistical data that you think you could reuse or that you want to look at as a referee. The data is easily available, and it is in a format that you can read. The data, however, is confusing. The fields in the tables have cryptic names such as “rgt5” and “avgB” that are not defined anywhere, leaving you to guess their meaning. Units of measurement are missing. Some records are marked as “invalid” without any explanation of the reason and without making clear whether those records were used in the calculations or not. Derived data is calculated from a formula, but the implementation in the spreadsheet is slightly but significantly different from the formula in the article. If you re-run the code, the results are thus a bit different from those stated in the article. At some point, you try to contact the authors, but the contact data is outdated, or it is unclear which of several authors can help with the data (you can picture such a scene in this animated short video). Note that in the scenario we describe, the research data might have been perfectly Findable, Accessible, and technically Interoperable, but without attention to the Reusability requirements, the whole purpose of FAIR data is defeated.
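One lightweight way to avoid the situation described above is to ship a machine-readable data dictionary together with the dataset. The following is a minimal sketch rather than an established standard: the meanings and units attached to “rgt5” and “avgB”, the extra column “flag”, and the helper `undocumented_columns` are all hypothetical and serve only to illustrate the idea.

```python
# Hypothetical data dictionary: every column gets a description and a unit.
# The meanings given for "rgt5" and "avgB" are invented for illustration.
DATA_DICTIONARY = {
    "rgt5": {"description": "Reaction time, trial 5", "unit": "ms"},
    "avgB": {"description": "Mean score of group B", "unit": "points"},
}


def undocumented_columns(columns, dictionary):
    """Return the column names that lack a description or a unit."""
    missing = []
    for name in columns:
        entry = dictionary.get(name, {})
        if not entry.get("description") or not entry.get("unit"):
            missing.append(name)
    return missing


# Column names as they might appear in the released table (assumed).
columns_in_file = ["rgt5", "avgB", "flag"]
print(undocumented_columns(columns_in_file, DATA_DICTIONARY))
```

A check like this could run before every release of the dataset, so that a new column cannot be published without at least a description and a unit.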

In computer-code data, documentation and good community development practices are non-trivial issues the industry has been addressing for a long time. Communities of programmers concerned by these problems have developed tools and protocols that solve, mitigate, or help manage these issues. Ideally, scientists working on scientific computing should learn and follow those good practices for code management. For instance, package managers for standard libraries, version control systems, continuous integration schemes, automated testing, etc., are standard techniques in the computer industry. While not using any of these techniques and just releasing source code in zip files might not break F-A-I principles, it will make reuse and community development much more difficult.

Documenting algorithms is especially important. Algorithms frequently use tricks, constants that get hard-coded, code patterns that come from standard recipes, parts that handle exceptional cases… Most often, even a very well-commented code is not enough to understand the algorithm, and a scientific paper is published to explain how the algorithm works. The risk is having a mismatch between the article that explains the algorithm, and the released production-ready code that implements it. If the code implements something similar but not exactly what is described in the article, there is a gap where mistakes can enter. Having a close integration between the paper and the code is crucial to prevent the newcomer from having to rework how the described algorithm translates into code.

Verifiability

As we introduced above, independent verification is a pillar of scientific research, and verification cannot happen without reusability of all necessary research data. MaRDI puts a special effort into enabling verification of data-driven mathematical results, by building FAIR tools and exchange platforms for the fields of computer algebra, numerical analysis, and statistics and machine learning.

An interesting example arises in computer algebra research. In that field, output results are often as valuable as the program that produced them. For instance, classifications and lists are valuable by themselves (see for example the LMFDB or MathDB sites for some classification projects). Once such a list is found, it can be stored and reused for other purposes without any need to revisit the algorithm that produced it. Hence, the focus is normally on the reusability of the output, while the reusability of the sources is forgotten: the provenance of the data, how it was created, and which techniques were used to find it go undescribed. This entails serious risks. Firstly, it is essential to verify that the list is correct (since a lot of work will be carried out assuming it is). Secondly, it is often the case that later research needs a slight variation of the list offered in the first place, so researchers need to modify parameters or characteristics of the algorithm to create a modified list.

In the case of numerical analysis, the output algorithms are usually focused on user reusability, often in the form of computing packages or libraries. However, several different algorithms may compete for accuracy, speed, hardware requirements, etc., so the “verification” process gets replaced by a series of benchmarks that can rate an algorithm in different categories and verify its performance. We have described, in the previous newsletter, how MaRDI would like to make numerical algorithms easier to reuse and benchmark them in different environments.

As for statistical data, our Interoperability issue of the newsletter describes how MaRDI curates datasets with “ground truths,” known facts that we know for sure independently from the data, that allow for the validation of new statistical tools to be applied to the data. In this case, re-using these new statistical tools on new studies increases the corpus of cases where the tool has been successfully used, making each reuse a part of the validation process.

Licenses

We also discussed licenses in our Accessibility issue. Let’s recall that FAIR principles do not prescribe free / open licenses, although those licenses are the best way to allow unrestricted reusability. However, FAIR principles do require a clear statement of the license that applies, be it restrictive or permissive.

Even within free/open licenses, the choice is wide and tricky. In software, open source licenses (e.g. MIT, Apache licenses) refer to the fact that the source code must be provided to the user. Those are amongst the most permissive because with the code one can study, run, or modify it. In contrast, free software licenses (e.g. GPL) carry some restrictions and an ethical/ideological load. For instance, many free licenses include copyleft, which means that any derived work must keep the same license, effectively preventing a company from bundling this software in a proprietary package that is not free software.

In creative works (texts, images…), the Creative Commons licenses are the standard legal tool to explicitly allow redistribution of works. There are several variants, ranging from almost no restrictions (CC0 / Public domain), to including clauses for attribution (CC-BY, attribution), sharing with the same license (CC-SA, share alike), or restricting commercial use (CC-NC, non-commercial) or derivative works (CC-ND, no derivatives), and any compatible combination. For databases, the Open Database License (ODbL) is a widely used open license, along with CC.

The following diagram shows how you can determine which CC license would be appropriate for you to use:

Diagram on Intellectual Property and Creative Commons by MaRDI,

licensed under CC BY-NC-ND

Note that CC-ND is not an open license, and CC-NC is subject to interpretation of the term “non-commercial,” which can pose problems. While CC licenses have been defended in court in many jurisdictions, there are always legal details that can pose issues. For instance, the CC0 license intends to waive all rights over a work, but in some jurisdictions, there are rights (such as authorship recognition) that cannot be waived. Other details concern the license versions. The latest CC version is 4.0, and it intends to be valid internationally without need to “port” or adapt to each jurisdiction, but each CC version has its own legal text and thus provides slightly different legal protection. Please note that this survey article does not provide legal advice. You can find all the legal text and human-readable text on the CC website.

In general, the best policy for open science is to use the least restrictive license that suits your needs and, with very few exceptions, not to add or remove clauses to modify a license. Reusing and combining content implies that newly generated content needs a license compatible with those of the parts that were used. This can become complicated or impossible the more restrictions they have (for instance, with interpretations of commercial interest or copyleft demands). Also, licenses and user agreements can conflict with other policies, such as data privacy; see an example in the Data Date interview in this newsletter.

Community

Perhaps the most synthetic form of the Reusability principle would be “do as the community does or needs” since it is a goal-focused principle: if the community is re-using and exchanging data successfully, keep those policies; if the community struggles with a certain point, act so that reuse can happen.

MaRDI takes a practical approach to this, studying the interaction between and within the mathematics community and other research communities and the industry. We described this “collaboration with other disciplines” in the last newsletter, and we highlighted the concept of “workflow” as the object of study, that is, the theoretical frameworks, the experimental procedures, the software tools, the mathematical techniques, etc. used by a particular research community. By studying the workflows in concrete focus communities, we expect to significantly increase and improve their reuse of mathematical tools, while also setting methods that will apply to other research communities as well.

MaRDI’s most visible output will be the MaRDI Portal, which will give access to a myriad of ‘FAIR’ resources via federated repositories, organized cohesively in Knowledge Graphs. MaRDI services will not only facilitate reusability of research data to mathematicians and researchers in other fields alike but also be a vivid example of best-practices research life. This portal will be a gigantic endeavor to organize FAIR research data, a giant on whose shoulders tomorrow’s scientists can stand. We strive for MaRDI to establish a new data culture in the mathematical research community and in all disciplines it relates to.

In Conversation with Elisabeth Bergherr

In this episode of Data Dates, Elisabeth and Christiane talk about reusability and the use of licenses in interdisciplinary statistical research, student theses, and teaching.

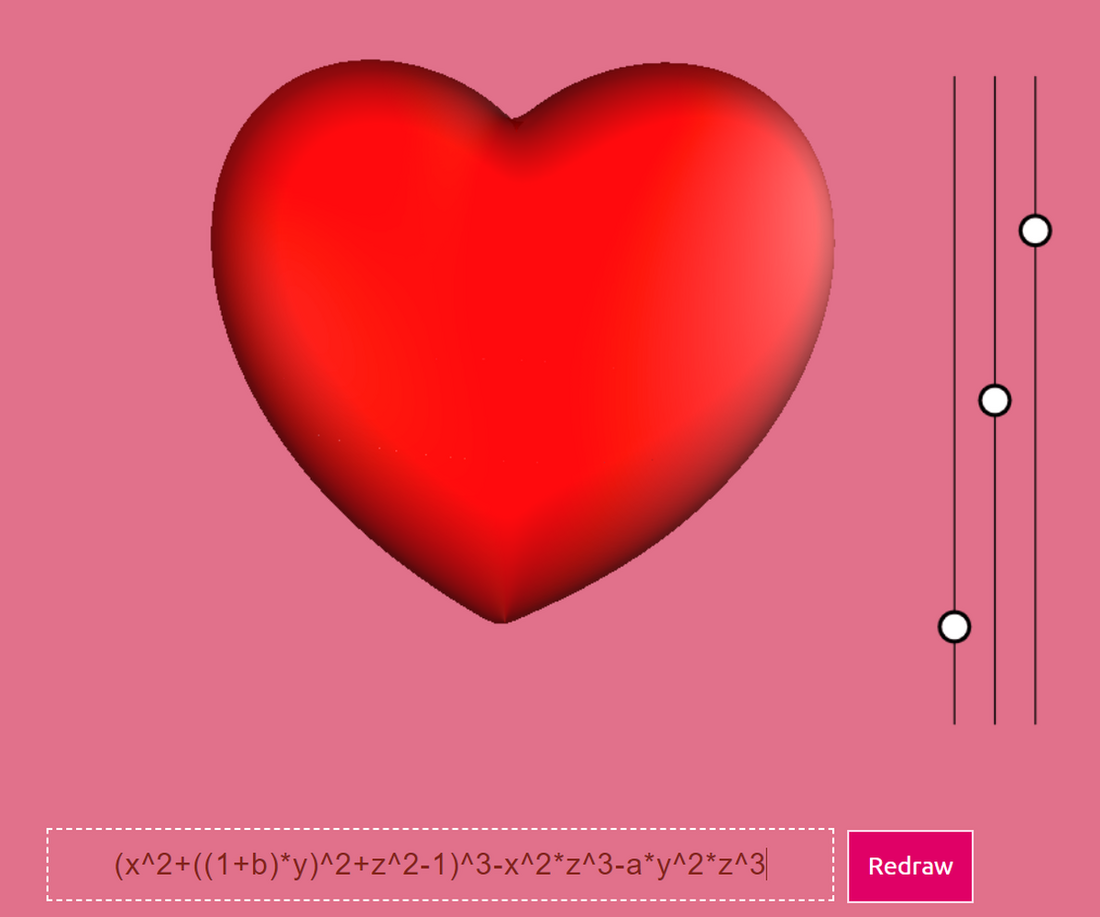

Love Data Week

Love Data Week is an international week of actions to raise awareness for research data and research data management. As part of this initiative, MaRDI created an interactive website that allows you to play around with various mathematical objects and learn interesting facts about their file formats.

Research data in discrete math

In mid-March, the MaRDI outreach task area hosted the first research-data workshop for rather theoretical mathematicians in discrete math, geometry, combinatorics, computational algebra, and algebraic geometry. These communities are not covered by MaRDI's topic-specific task areas but form an important part of the German mathematical landscape, in particular with the initiative for a DFG priority program whose applicants co-organized the event. A big crowd of over sixty participants spent two days in Leipzig discussing automated recognition of Ramanujan identities with Peter Paule, machine-learned Hodge numbers with Yang He, and Gröbner bases for locating photographs of dragons with Kathlén Kohn. Michael Joswig led a panel focusing on the future of computers in discrete mathematics research and the importance of human intuition. Antony Della Vecchia presented file formats for mathematical databases, and Tobias Boege encouraged the audience to reproduce published results in a hands-on session, with participants finding pitfalls even in the simplest exercise. In the final hour, young researchers took the stage to present their areas of expertise, the research data they handle, and their take-away messages from this workshop: to follow your interests, keep communicating with your peers and scientists from other disciplines, and make sure your research outputs are FAIR for yourself and others. This program made for a very lively atmosphere in the lecture hall and was complemented by engaging discussions on mathematicians as pattern-recognition machines, how mathematics might be a bit late to the party in terms of software, whether humans will be obsolete soon, and the hierarchy of difficulty in mathematical problems.

Conference on Research Data Infrastructure

The Conference will take place September 12th – 14th, 2023, in Karlsruhe (Germany). There will be disciplinary tracks and cross-disciplinary tracks.

Abstract submissions deadline: April 21, 2023

More information:

- in English

IceCube - Neutrinos in Deep Ice

This code competition aims to identify which direction neutrinos detected by the IceCube neutrino observatory came from. PUNCH4NFDI is focused on particle, astro-, astroparticle, hadron, and nuclear physics, and is supporting this ML challenge.

Deadline: April 23, 2023

More Information:

- in English

Open Science Radio

Get an overview of all NFDI consortia funded to date, and gain an insight into the development of the NFDI, its organizational structure, and goals in the 2-hour Open Science Radio episode interviewing Prof. Dr. York Sure-Vetter, the current director of the NFDI.

Listen:

- in English

The DMV, in cooperation with the KIT library, maintains a free self-study course on good scientific practice in mathematics, including notes on the FAIR principles. (Register here to subscribe to the free course.)

Edmund Weitz of the University of Hamburg recorded an entertaining chat about mathematics with ChatGPT (in German).

Remember our interview about accessibility with Johan Commelin in the second MaRDI Newsletter? The Xena Project is "an attempt to show young mathematicians that essentially all of the questions which show up in their undergraduate courses in pure mathematics can be turned into levels of a computer game called Lean". It has published a blog post highlighting very advanced maths, which can now be understood using the interactive theorem prover Lean Johan told us about.

On March 14, the International Day of Mathematics was celebrated worldwide. You can relive the celebration through the live blog, which also includes two video sessions with short talks for a general audience—one with guest mathematicians and one with the 2022 Fields Medal laureates. This year, the community was asked to create Comics. Explore the featured gallery and a map with all of the mathematical comic submissions worldwide.

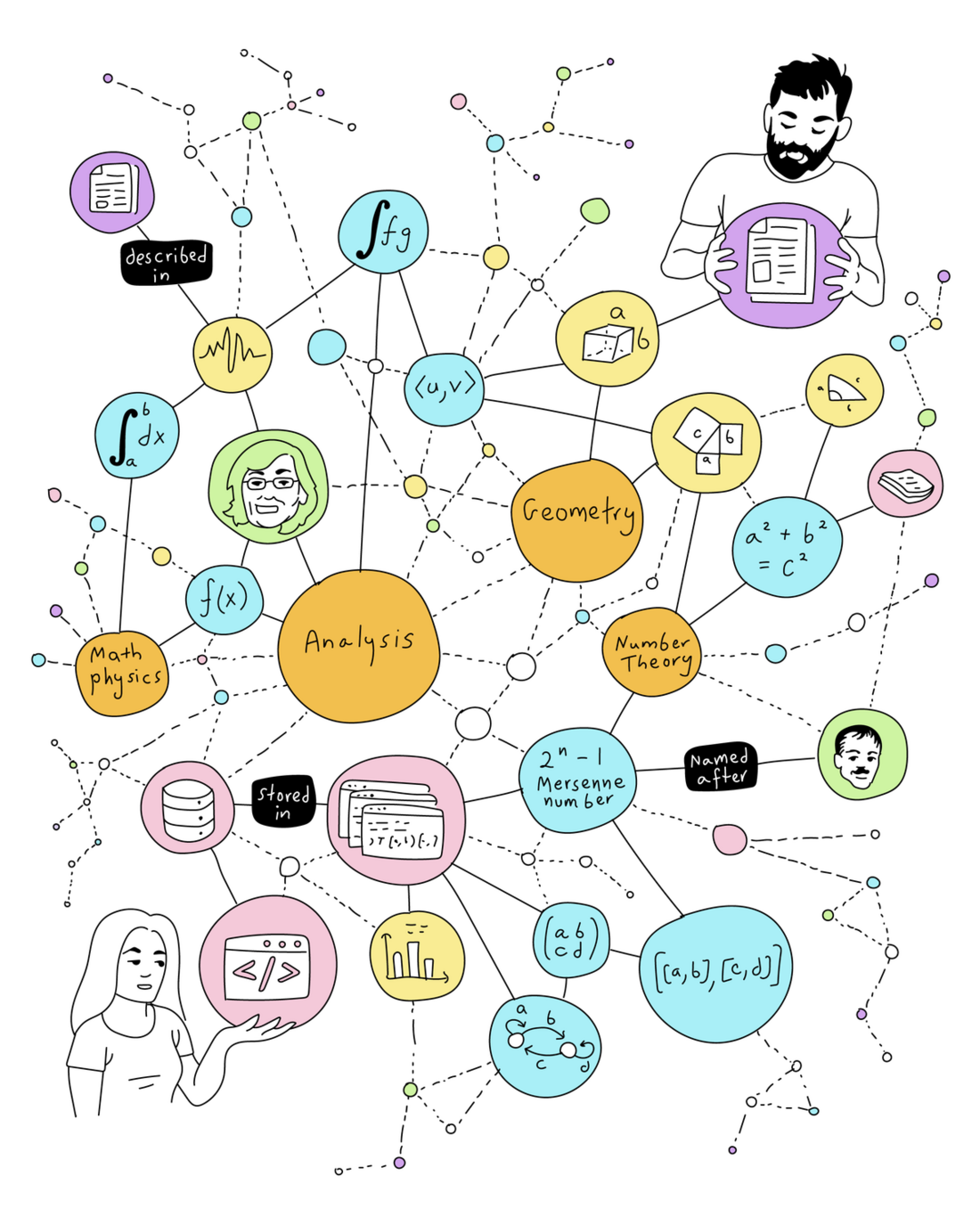

Welcome to the fifth issue of the MaRDI Newsletter on mathematical research data. In the first four issues, we focused on the FAIR principles. Now we move to a topic that makes use of FAIR data and also implements the FAIR principles in data infrastructures. So without further ado, let me introduce you to the ultimate use case of FAIR data: Knowledge Graphs.

by Ariel Cotton, licensed under CC BY-SA 4.0.

Knowledge graphs are very natural and represent information similar to how we humans think. They come in handy when you want to avoid redundancy in storing data (as it may happen quite often with tabular methods), and also for complex dataset queries.

This newsletter issue offers some insight into the structure of knowledge, examples of knowledge graphs, including some specific to MaRDI, an interview with a knowledge graph expert, as well as news and announcements related to research data.

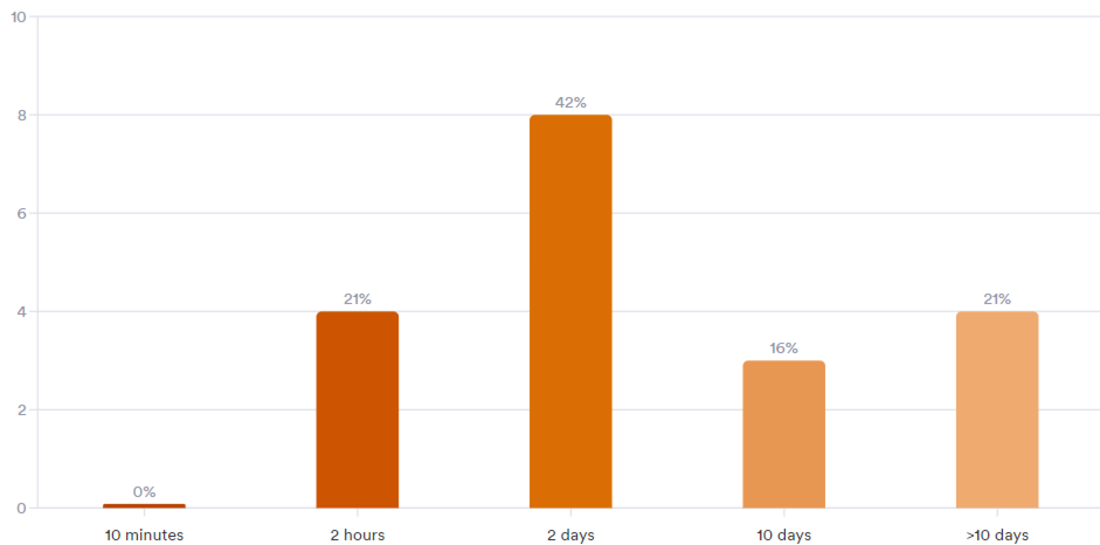

In the last issue, we asked how long it would take you to find and understand your own research data. These are the results:

Now we ask you for specific challenges when searching for mathematical data. You may choose from the multiple-choice options or enter something else you faced.

Click to enter your challenges!

You will be taken to the results page automatically, after submitting your answer. Additionally, the current results can be accessed here.

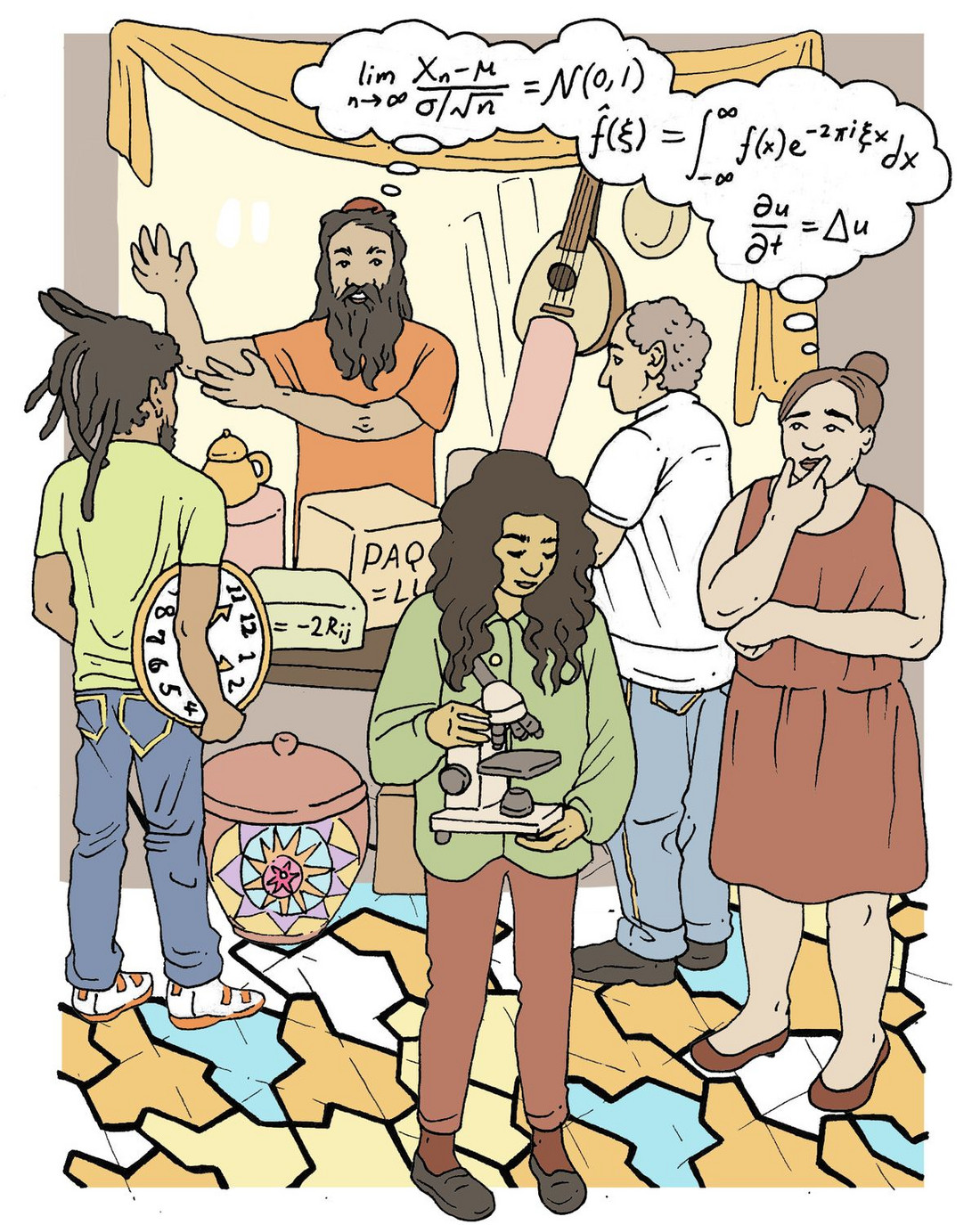

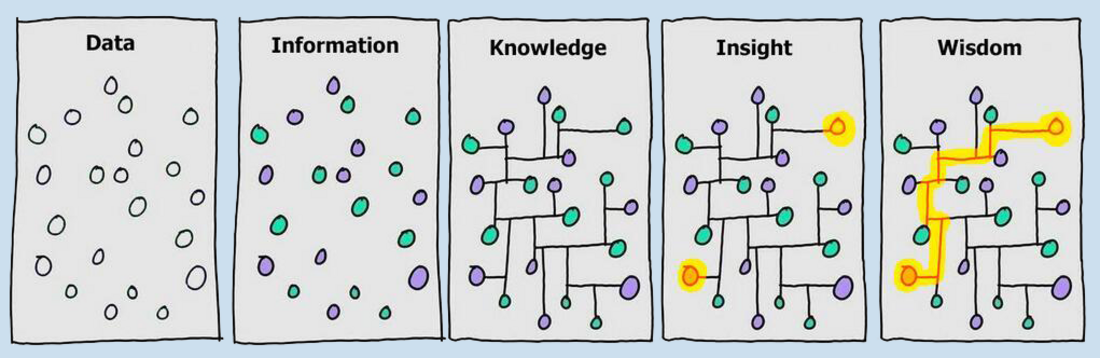

The knowledge ladder

We are not sure exactly how humans store knowledge in their brains, but we certainly pack concepts into units, and then relate those conceptual units together. For example, if asked to list animals, nobody remembers an alphabetical list (unless you explicitly train yourself to remember such a list). Instead, you start the list with something familiar, like a dog, then you recall that dog is a pet animal, and then you list other pet animals like cat or canary. Then you recall that canary is a bird, and then you list other birds, like eagle, falcon, owl… when you run out of birds, you recall that birds fly in the air, which is one environment medium. Another environment medium is water, and this prompts you to start listing fishes and sea animals. This suggests that we can represent human knowledge in the form of a mathematical graph: concepts are nodes, and relationships are edges. This structure is also ingrained in language, which is the way humans communicate and store knowledge. All languages in the world, across all cultures, have nouns, verbs, or adjectives, and establish relationships through sentences. Almost every language organizes sentences in a subject-verb-object pattern (or any permutations: SVO, SOV, VSO, etc). The subject and the object are typically nouns or pronouns, the verb is often a relationship. A sentence like “my mother is a teacher” encodes the following knowledge: the person “my mother” is a node 1, “teacher” is a node 2, and “has as a job” is a relational edge from node 1 to node 2. Also, there is a node 3, the person “me”, and a relationship “is the mother of” from node 1 to node 3, (which implies a reciprocal relationship “is a child of” from node 3 to node 1).
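The example sentence can be written down as a tiny labeled, directed graph. The snippet below is only a sketch of the idea: the list name `triples` and the helper `neighbours` are made up for illustration, and the node and edge labels are taken directly from the paragraph above.

```python
# Each fact is a (subject, predicate, object) triple,
# i.e. a labeled, directed edge between two nodes.
triples = [
    ("my mother", "has as a job", "teacher"),
    ("my mother", "is the mother of", "me"),
    ("me", "is a child of", "my mother"),
]


def neighbours(node, facts):
    """Return (predicate, object) pairs for all edges leaving `node`."""
    return [(p, o) for s, p, o in facts if s == node]


# The node set is everything that appears as a subject or an object.
nodes = sorted({s for s, _, _ in triples} | {o for _, _, o in triples})
print(nodes)
print(neighbours("my mother", triples))
```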

David Somerville / Hugh McLeod

informationversusknowledge-blog.tumblr.com/

On this construction, we can expect to have an abstract representation of human knowledge that we can store, retrieve, and search with a computer. But not all data automatically gives knowledge, and raw knowledge is not all you may need to solve a problem. This distinction is sometimes referred to as the “knowledge ladder”, although the terminology has not been universally agreed upon. In this ladder, data is the lowest level of information: raw input values that we have collected with our senses or with a sensor device. Information is data tagged with meaning: I am a person, that person is called Mary, teaching is a job, this thing I see is a dog, this list of numbers contains daily temperatures in Honolulu. Knowledge is achieved when we find relationships between bits of information: Mary is my mother; Mary’s job is teacher; these animals live together and compete for food; pressure, temperature, and volume in a gas are related by the gas law PV=nRT. Insight is discernment: singling out the information that is useful for your purpose from the rest, and finding seemingly unrelated concepts that behave alike. Finally, wisdom is understanding the connections between concepts. It is the ability to explain step by step how concept A relates to concept B. This ladder is illustrated in the image above. From this point of view, “research” means knowing and understanding all portions of human knowledge that fall into or close to your domain, and then enlarging the graph with more nodes and edges, for which you need both insight and wisdom.

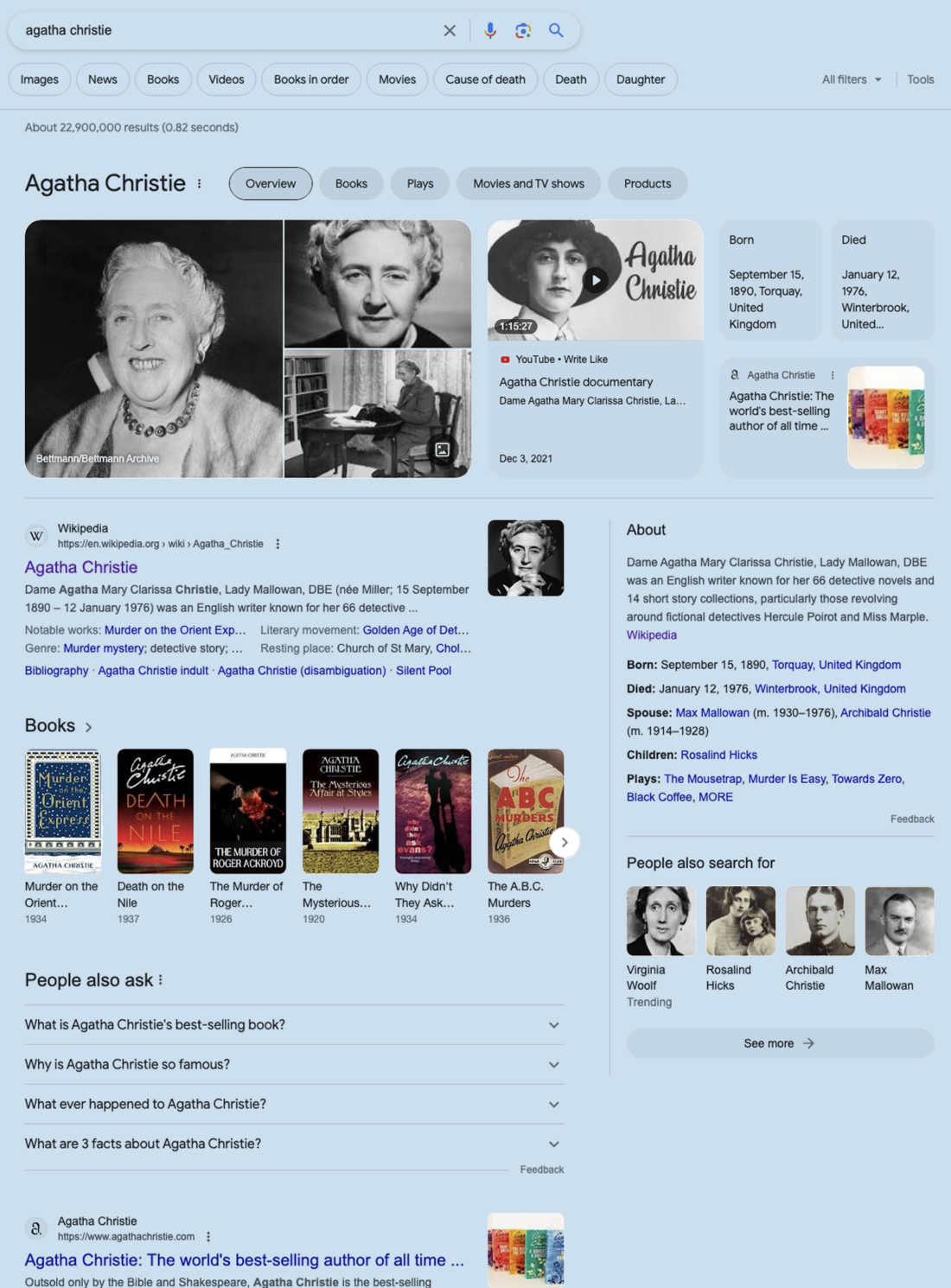

The advent of knowledge graphs

Knowledge graphs (KG) as a theoretical construction have been discussed in information theory, linguistics, and philosophy for at least five decades, but it is only in this century that computers have allowed us to implement algorithms and data retrieval at a practical and massive scale. Google introduced its own knowledge graph in 2012, and you may be familiar with it. When you look up a person, a place, etc. in Google, there is a small box to the right that displays some key information such as birthdate and achievements for a person, opening times for a shop, etc. This information is not a snippet from a website; it is information collected from many sources and packed into a node of a graph. Those nodes are then linked together by some affinity relationship. For instance, if you look up “Agatha Christie”, you will see an “infobox” with her birthdate, date of death, a short description extracted from Wikipedia, a photograph… and also a list of “People also search for” that will bring you to her relatives, such as Archibald Christie, or to other British authors, such as Virginia Woolf.

But probably the biggest effort to bring all human knowledge into structured data is Wikidata. Wikidata is a sister project of Wikipedia. Wikipedia aims to gather all human knowledge in the form of encyclopedic articles, that is, into non-structured human-readable data. Wikidata, by contrast, is a knowledge graph. It is a directed labeled graph, made of triples of the form subject (node) - predicate (edge) - object (node). The nodes and edges are labeled; in fact, each of them carries a whole list of attributes.

The Wikidata graph is not designed to be used directly by humans. It is designed to retrieve information automatically, to be a “base of truth” that can be relied on. For instance, it can check automatically that all languages of Wikipedia state basic facts correctly (birthplace, list of authored books…), and can be used by external services (such as Google and other search engines or voice assistants) to offer correct and verifiable answers to queries.

In practice, nodes are pages, for instance, this one for Agatha Christie. Inside the page, it lists some “statements”, which are the labeled edges to other nodes. For example, Agatha Christie is an instance of a human, her native language is English, and her field of work is crime novel, detective literature, and others. If we compare that page with the Agatha Christie entry in the English Wikipedia, clearly the latter contains more information, and the Wikidata page is less convenient for a human to read. Potentially, all the ideas described with English sentences in Wikipedia could be represented by relationships in the Wikidata graph, but this task is tedious and difficult for a human, and AI systems are not yet sufficiently developed to make this conversion automatically.

In the backend, Wikidata is stored in relational SQL databases (the same MediaWiki software as used in Wikipedia), but the graph model is that of triples subject-predicate-object as defined in the web standard RDF (Resource Description Framework). This graph structure can be explored and queried with the language SPARQL (a recursive acronym for SPARQL Protocol and RDF Query Language). Note that usually, we use the verb “query” as opposed to “search” when we want to retrieve information from a graph, database, or other structured sources of information.
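To give a feeling for what “querying” a triple store means, here is a deliberately simplified sketch in plain Python. It is not how RDF stores or SPARQL engines are implemented: the names `TRIPLES` and `match` are invented for this illustration, the statements are the ones quoted above for Agatha Christie, and `None` plays the role that a variable such as `?x` would play in a SPARQL pattern.

```python
# Tiny in-memory triple store; None acts as a wildcard,
# loosely comparable to a variable in a SPARQL triple pattern.
TRIPLES = [
    ("Agatha Christie", "instance of", "human"),
    ("Agatha Christie", "native language", "English"),
    ("Agatha Christie", "field of work", "crime novel"),
    ("Agatha Christie", "field of work", "detective literature"),
]


def match(pattern, triples):
    """Return all triples that agree with `pattern` on every non-None position."""
    return [
        t for t in triples
        if all(p is None or p == v for p, v in zip(pattern, t))
    ]


# "What are Agatha Christie's fields of work?"
fields = [o for _, _, o in match(("Agatha Christie", "field of work", None), TRIPLES)]
print(fields)
```

A search would look for the string “crime” anywhere in a page, whereas this query asks for the objects attached to one node by one specific predicate.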

Thus, one can access the Wikidata information in several ways. First, one can use the web interface to access single nodes. The web interface has a search function that allows one to look up pages (nodes) that contain a certain search string. However, it is much more insightful to get information that takes advantage of the graph structure, that is, querying for nodes that are connected to some topic by a particular predicate (statement), or that have a particular property. For Wikidata, we have two main tools: direct SPARQL queries, and the Scholia plug-in tool.

The web interface and API at query.wikidata.org allow you to send queries in the SPARQL language. This is the most powerful way to search, and you can browse the examples on that site. The output can be a list, a map, a graph, etc. There is a query builder to help, but essentially it requires some familiarity with the SPARQL language. Scholia, on the other hand, is a plug-in tool that helps with querying and visualizing the Wikidata graph. For instance, if you search for “covid-19” via Scholia, it will offer a graph of related topics, a list of authors and recent publications on the topic, organizations, etc., in different visual forms.
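As a rough illustration of a direct SPARQL query, the following sketch builds a request for the Wikidata query service from Python. It rests on several assumptions that you should verify on the site itself: that the endpoint path is `/sparql`, that Agatha Christie is item `Q35064`, and that `P50` is the “author” property. The helper names and the User-Agent string are made up. Only the URL is built when the script runs; `run_query` needs network access and is not called here.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint of the Wikidata query service.
ENDPOINT = "https://query.wikidata.org/sparql"

# Works whose author (assumed property P50) is Agatha Christie (assumed item Q35064).
QUERY = """
SELECT ?work ?workLabel WHERE {
  ?work wdt:P50 wd:Q35064 .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }
}
LIMIT 10
"""


def build_url(query, endpoint=ENDPOINT):
    """Encode the query as a GET request URL asking for JSON results."""
    params = urllib.parse.urlencode({"query": query, "format": "json"})
    return endpoint + "?" + params


def run_query(query):
    """Send the query (requires network access) and return the result rows."""
    request = urllib.request.Request(
        build_url(query),
        headers={"User-Agent": "newsletter-example/0.1 (illustrative script)"},
    )
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    return data["results"]["bindings"]


print(build_url(QUERY))
```

Pasting the text of `QUERY` into the web interface at query.wikidata.org is an alternative way to try it without writing any code.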

Knowledge graphs, artificial intelligence, and mathematics

Knowledge graphs are a hot research area in connection with Artificial Intelligence. On the one hand, there is the challenge of creating a KG from a natural language text (for instance, in English). While detecting grammar and syntax rules (subject, verb, object) is relatively doable, creating a knowledge graph requires encoding the semantics, that is, the meaning of the sentence. In the example of a few paragraphs above, “my mother is a teacher”, to extract the semantics we need the context of who is “me” (who is saying the sentence), we need to check if we already know the person “my mother” (her name, some kind of identifier), etc. The node for that person can be on a small KG with family or contextual information, while “teacher” can be part of a more general KG of common concepts.

In the case of mathematics, extracting a KG from natural language is a tremendous challenge, infeasible with today’s techniques. Take a theorem statement: it contains definitions, hypotheses, and conclusions, and each one has a different context of validity (the conclusion is only valid under the hypotheses, but that is what you need to prove). Then imagine that you start your proof by contradiction (reductio ad absurdum), so you have several sentences that are valid under the assumption that the hypotheses of the theorem hold, but not the conclusion. At some point, you want to find a contradiction with your previous knowledge, thus proving the theorem. The current knowledge graph paradigm is simply not suitable for following this line of argument. The closest thing to structured data for theorems and proofs is formal languages in logic, and there are practical implementations such as the Lean theorem prover. Lean is a programming language that can encode symbolic manipulation rules for expressions. A proof by algebraic manipulation of a mathematical expression can therefore be described as a list of manipulations starting from an original expression (move a term to the other side of the equal sign, raise the second index in this tensor using a metric…). Writing proofs in Lean can be tedious, but it has the benefit that the result is automatically verifiable by a machine, so no human referee is needed to check the correctness of a formalized proof. Of course, we are still far from an AI checking the validity of a proof written in natural language without human intervention, or even figuring out proofs of conjectures on its own. On the other hand, a dependency graph of theorems, derived in a logic chain from some axioms, is something that a knowledge graph like the MaRDI KG would be suitable to encode.
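To give a flavor of what a machine-checkable statement looks like, here is a very small sketch in Lean 4 syntax. The theorem name `add_comm_example` is made up for this illustration; the first proof simply refers to a lemma from Lean's core library, and the second one performs a single rewriting step of the kind described above.

```lean
-- A statement together with a proof term that Lean can check mechanically.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- A proof by manipulation: rewrite `a` into `b` using the hypothesis `h`.
example (a b c : Nat) (h : a = b) : a + c = b + c := by
  rw [h]
```

If either proof were wrong, Lean would refuse to accept the file, which is the sense in which such proofs are verified automatically.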

In any case, structured knowledge (in the form of KGs or other forms, such as databases) is a fundamental piece in providing AI systems with a source of truth. Recent advances in the field of generative AI include the famous conversational bot ChatGPT and other Large Language Models (LLMs), which are impressive in the sense that they can generate grammatically correct text with meaningful sentences while keeping track of a conversation. However, these systems are famous for not being able to distinguish truth from falsehood (to be precise, the AI is trained with text data that is assumed to be mostly true, but it cannot make any logical deductions). If we ask an AI for the biography of a nonexistent person, it may simply invent it in an attempt to fulfill the task. If we contradict the AI on a plain fact, it will probably just accept our input despite its previous answer. Currently, conversational AI systems are not capable of rebutting false claims by providing evidence. However, it is likely that a future conversational AI with access to a knowledge base (KG, database, or other) will be able to process queries and generate answers in natural language, but also to check for verified facts and to present relevant information extracted from the knowledge base. An example in this direction is the Wolfram Alpha plug-in for ChatGPT. With some enhanced algorithms to traverse and explore a knowledge graph, we will maybe witness AI systems stepping up from Knowledge to Insight, or further up the ladder.

One of the mottos of MaRDI is “Your Math is Data”. Indeed, from an information theory perspective, all mathematical results (theorems, proofs, formulas, examples, classifications) are data, and some mathematicians also use experimental or computational data (statistical datasets, algorithms, computer code…). MaRDI intends to create the tools, the infrastructure, and the cultural shift to manage and use all research data efficiently. In order to climb up the “knowledge ladder” from Data to Information and Knowledge, the Data needs to be structured, and knowledge graphs are one excellent tool for that goal.

AlgoData

Several initiatives within MaRDI are based on knowledge graphs. A first example is AlgoData (requires MaRDI / ORCID credentials), a knowledge graph of numerical algorithms. In this KG, the main entities (nodes) are algorithms that solve particular problems (such as solving linear systems of equations or integrating differential equations). Other entities in the graph are supporting information for the algorithms, such as articles, software (code), or benchmarks. For example, we want to encode that algorithm 1 solves problem X, is described in article Y, is implemented in software Z, and scores p points in benchmark W. A use case would be querying for algorithms that solve a particular type of problem, comparing the candidates using certain benchmarks, and retrieving the code to be used (ideally, code that is interoperable with your system setup).
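The statement "algorithm 1 solves problem X, is described in article Y, is implemented in software Z" can be sketched as a list of subject-predicate-object triples. The following Python snippet is only an illustration: the entity names are placeholders, the predicate labels are borrowed from the AlgoData ontology, and the helper functions `subjects` and `objects` are hypothetical and not part of AlgoData.

```python
from collections import namedtuple

Triple = namedtuple("Triple", ["subject", "predicate", "obj"])

# Placeholder entities; predicate labels follow the AlgoData ontology.
graph = [
    Triple("algorithm_1", "solves", "problem_X"),
    Triple("algorithm_1", "is documented in", "article_Y"),
    Triple("algorithm_1", "is implemented by", "software_Z"),
    Triple("algorithm_1", "is tested by", "benchmark_W"),
    Triple("algorithm_2", "solves", "problem_X"),
    Triple("algorithm_2", "is implemented by", "software_V"),
    Triple("algorithm_3", "solves", "problem_Q"),
]


def subjects(graph, predicate, obj):
    """All subjects s such that (s, predicate, obj) is in the graph."""
    return sorted({t.subject for t in graph
                   if t.predicate == predicate and t.obj == obj})


def objects(graph, subject, predicate):
    """All objects o such that (subject, predicate, o) is in the graph."""
    return sorted({t.obj for t in graph
                   if t.subject == subject and t.predicate == predicate})


# Use case: which algorithms solve problem X, and where is their code?
candidates = subjects(graph, "solves", "problem_X")
implementations = {a: objects(graph, a, "is implemented by") for a in candidates}
print(candidates)
print(implementations)
```

A real knowledge graph stores identifiers rather than plain strings, but the query pattern (fix two positions of a triple, ask for the third) is the same.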

AlgoData has a well-defined ontology. An ontology (from the Greek, loosely, “study or discourse of the things that exist”) is the set of concepts relevant to your domain. For instance, in an e-commerce site, “article”, “client”, “shopping cart”, or “payment method” are concepts that need to be defined and included in the implementation of the e-commerce platform. For knowledge graphs, the list would include all types of nodes, and all labels for the edges and other properties. In general-purpose knowledge graphs, such as Wikidata, the ontology is huge, and for practical purposes the user (human or machine) relies on search/suggestion algorithms to identify the property that best fits their intention. In contrast, for specific-purpose knowledge graphs, such as AlgoData, a reduced and well-defined ontology is possible, which simplifies the overall structure and search mechanisms.

The ontology of AlgoData (as of June 2023, under development) is the following:

Classes:

Algorithm, Benchmark, Identifiable, Problem, Publication, Realization, Software.

Object Properties:

analyzes, applies, documents, has component, has subclass, implements, instantiates, invents, is analyzed in, is applied in, is component of, is documented in, is implemented by, is instance of, is invented in, is related to, is solved by, is studied in, is subclass of, is surveyed in, is tested by, is used in, solves, specializedBy, specializes, studies, surveys, tests, uses.

Data Properties:

has category, has identifier.

We can display this ontology as a graph.

Currently, AlgoData implements two search functions: “Simple search”, which matches words in the content, and “Graph search”, where we query for nodes in the graph whose connections satisfy certain conditions. The main AlgoData page gives a sneak preview of the system (these links are password protected, but MaRDI team members and any researcher with a valid ORCID identifier can access them).

A project closely related to AlgoData is the Model Order Reduction Benchmark (MORB) and its Ontology (MORBO). This sub-project focuses on the creation of benchmarks for algorithms solving Model Reduction (a standard technique in mathematical modeling, used to reduce the simulation time for large-scale systems) and has its own knowledge graph and ontology, tailored to this problem. More information is available on the MOR Wiki and the MaRDI TA2 page.

The MaRDI portal and knowledge graph

The main output from the MaRDI project will also be based on a knowledge graph. The MaRDI Portal will be the entry point to all services and resources provided by MaRDI. The portal will be backed by the MaRDI knowledge graph, a big knowledge graph scoped to all mathematical research data. You can already have a sneak peek to see the work in progress.

The architecture of the MaRDI knowledge graph follows that of Wikidata, and it is compatible with it. In fact, many entries of Wikidata have been imported into the MaRDI KG and vice-versa. The MaRDI knowledge graph will also integrate many other open knowledge resources, thus building on many existing projects. A non-exhaustive list would include:

- The MaRDI AlgoData knowledge graph described above.

- Other MaRDI knowledge graphs, such as the MORWiki or the graph of Workflows with other disciplines.

- The zbMATH Open repository of reviews of mathematical publications.

- The swMATH Open database of mathematical software.

- The NIST Digital Library of Mathematical Functions (DLMF).

- The CRAN repository of R packages.

- Mathematical publications in arXiv.

- Mathematical publications in Zenodo.

- The OpenML platform of Machine Learning projects.

- Mathematical entries from Wikidata.

- Entries added manually by users.

The MaRDI Portal does not intend to replace any of those projects, but to link all those openly available resources together in a big knowledge graph of greater scope. As of June 2023, the MaRDI KG has about 10 million triples (subject-predicate-object as in the RDF format). As with Wikidata, the ontology is too big to be listed, and it is described within the graph itself (e.g. the property P2 is the identifier for functions from the DLMF database).

Let us see some examples of entries in the MaRDI KG. A typical entry node in the MaRDI KG (in this example, the program ggplot2) is very similar to a Wikidata entry. This page is a human-friendly interface, but we can also get the same information in machine-readable formats such as RDF or JSON.

For the end user, it is probably more useful to query the graph for connections. As with Wikidata, we can query the MaRDI knowledge graph directly in SPARQL. Work is in progress to enable the Scholia plug-in to work with the MaRDI KG. Currently, the beta MaRDI-Scholia queries against Wikidata.

Some queries that are available in the MaRDI KG but not on Wikidata are for instance queries to formulas in DLMF: here formulas that use the gamma function, or formulas that contain sine and tangent functions (the corpus of the database is still small, but it illustrates the possibilities). Wikidata can nevertheless query for symbols in formulas too.
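A query such as "formulas that contain both sine and tangent" is, at its core, a pattern with a shared variable matched against the triples of the graph. The Python sketch below imitates that idea in memory. It is not SPARQL and does not contact the MaRDI KG; the formula names and the predicate label `uses symbol` are made up for illustration.

```python
def match(graph, patterns):
    """Return all variable bindings that satisfy every (s, p, o) pattern.

    Terms starting with '?' are variables; a variable that appears in
    several patterns must take the same value in all of them.
    """
    solutions = [{}]
    for pattern in patterns:
        extended = []
        for binding in solutions:
            for triple in graph:
                candidate = dict(binding)
                ok = True
                for term, value in zip(pattern, triple):
                    if term.startswith("?"):
                        # Bind the variable, or check an earlier binding.
                        if candidate.setdefault(term, value) != value:
                            ok = False
                            break
                    elif term != value:
                        ok = False
                        break
                if ok:
                    extended.append(candidate)
        solutions = extended
    return solutions


# Hypothetical toy data, not taken from the DLMF or the MaRDI KG.
graph = [
    ("formula_A", "uses symbol", "gamma"),
    ("formula_B", "uses symbol", "sin"),
    ("formula_B", "uses symbol", "tan"),
    ("formula_C", "uses symbol", "sin"),
]

both = match(graph, [("?f", "uses symbol", "sin"),
                     ("?f", "uses symbol", "tan")])
formulas = sorted({b["?f"] for b in both})
print(formulas)
```

The shared variable `?f` is what forces both conditions to hold for the same formula; a query engine does the same thing, only with indexes instead of nested loops.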

The MaRDI KG is still in an early stage of development, and not ready for public use (all the examples cited are illustrative only). Once the KG begins to grow, mostly from open knowledge sources, the MaRDI team will improve it with some “knowledge building” techniques.

One such technique is the automated retrieval of structured information. For instance, the bibliographic references in an article are structured information, since they follow one of a few formats, and there are standards (bibTeX, Zb/MR number, …).
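As a rough illustration of why bibliographic references count as structured information, the following Python sketch turns a BibTeX entry into a dictionary of fields. The entry is a made-up placeholder and the function `parse_bibtex_entry` is hypothetical; it handles only flat `key = {value}` fields (no nested braces), so it is a sketch and not a full BibTeX parser.

```python
import re


def parse_bibtex_entry(text):
    """Parse one flat BibTeX entry into a dict (no nested braces)."""
    header = re.match(r"\s*@(\w+)\s*\{\s*([^,\s]+)\s*,", text)
    if header is None:
        raise ValueError("not a BibTeX entry")
    # Each field looks like: name = {value}  or  name = "value"
    fields = {
        key.lower(): value.strip()
        for key, value in re.findall(r'(\w+)\s*=\s*[{"]([^{}"]*)[}"]', text)
    }
    return {"type": header.group(1).lower(), "key": header.group(2), **fields}


# Placeholder entry, invented for this example.
entry = """
@article{doe2023example,
  author  = {Doe, Jane},
  title   = {A placeholder title},
  journal = {Journal of Examples},
  year    = {2023}
}
"""

record = parse_bibtex_entry(entry)
print(record["author"], record["year"])
```

Once references are available as fields like these, they can be turned into nodes and edges of the graph without any human reading the article.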

Another technique is link inference. This addresses the problem of low connectivity in graphs made by importing sub-graphs from multiple third-party sources, which may result in very few links between the sub-graphs. For instance, an article citing some references and a GitHub repository citing the same references are likely talking about the same topic. These inferences can then be reviewed by a human if necessary.
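One simple way to picture link inference is to compare the reference lists of two items and propose a link when they overlap strongly. The sketch below uses the Jaccard similarity with an arbitrary threshold of 0.5. Both the measure and the threshold are assumptions made for illustration, not the method used by MaRDI, and all names are placeholders.

```python
from itertools import combinations


def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def infer_links(references, threshold=0.5):
    """Propose links between items whose reference sets overlap enough."""
    links = []
    for x, y in combinations(sorted(references), 2):
        score = jaccard(references[x], references[y])
        if score >= threshold:
            links.append((x, y, score))
    return links


# Placeholder items and references.
references = {
    "article_A": {"ref1", "ref2", "ref3"},
    "github_repo_B": {"ref1", "ref2", "ref3", "ref4"},
    "article_C": {"ref9"},
}

candidate_links = infer_links(references)
print(candidate_links)
```

The output is a list of candidate links with a score, which is the kind of suggestion that a human reviewer could then accept or reject.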

Another enhancement would be to improve search in natural language so that more complex queries can be made in plain English without the need to use SPARQL language.

The latest developments of the MaRDI Portal and its knowledge graph will be presented at a mini-symposium at the forthcoming DMV annual meeting in Ilmenau in September 2023.

- Knowledge ladder: Steps on which information can be classified, from the rawest to the most structured and useful. Depending on the authors, these steps can be enumerated as Data, Information, Knowledge, Insight, Wisdom.

- Data: raw values collected from measurements.

- Information: Data tagged with its meaning.

- Knowledge: Pieces of information connected together with causal or other relationships.

- Knowledge base: A set of resources (databases, dictionaries…) that represent Knowledge (as in the previous definition).

- Knowledge graph: A knowledge base organized in the form of a mathematical graph.

- Insight: Ability to identify relevant information from a knowledge base.

- Wisdom: Ability to find (or create) connections between information points, using existing or new knowledge relationships.

- Ontology: Set of all the terms and relationships relevant to describe your domain of study. In a knowledge graph, the types of nodes and edges that exist, with all their possible labels.

- RDF (Resource Description Framework): A web standard to describe graphs as triples (subject - predicate - object).

- SPARQL (SPARQL Protocol And RDF Query Language, a recursive acronym): A language to send queries (information retrieval/manipulation requests) to graphs in RDF format.

- Wikipedia: a multi-language online encyclopedia based on articles (non-structured human-readable text).

- Wikidata: an all-purpose knowledge graph intended to host data relevant to multiple Wikipedias. As a byproduct, it has become a tool to develop the semantic web, and it acts as a glue between many diverse knowledge graphs.

- Semantic web: a proposed extension of the web in which the content of a website (its meaning, not just the text strings) is machine-readable, to improve search engines and data discovery.

- MediaWiki: the free and open-source software that runs Wikipedia, Wikidata, and also the MaRDI portal and knowledge graph.

- Scholia: A plug-in software for MediaWiki, to enhance visualization of data queries to a knowledge graph.

- AlgoData: a knowledge graph for numerical algorithms, part of the MaRDI project.

The video is available under the CC BY 4.0 license. You are free to share and adapt it, when mentioning the author (MaRDI).

In Conversation with Daniel Mietchen

In this episode of Data Dates, Daniel and Tabea talk about knowledge graphs. They touch on the general concept, how it would help you find the proverbial needle, and the specific challenges posed by mathematical structures. In addition, we also hear about the MaRDI knowledge graph and what it brings to mathematicians.

Leibniz MMS Days

The 6th Leibniz MMS Days, organized by the Leibniz Network "Mathematical Modeling and Simulation (MMS)", took place this year from April 17 to 19 in Potsdam at the Leibniz Institute for Agricultural Engineering and Bioeconomics. A small MaRDI faction, consisting of Thomas Koprucki, Burkhard Schmidt, Anieza Maltsi, and Marco Reidelbach, made their way to Potsdam to participate.

This year's MMS Days placed a special emphasis on "Digital Twins and Data-Driven Simulation," "Computational and Geophysical Fluid Dynamics," and "Computational Material Science," which were covered in individual workshops. There was also a separate session on research data and its reproducibility, in which Thomas introduced the MaRDI consortium with its goals and vision, and promoted two important MaRDI services of the future, AlgoData and ModelDB, two knowledge graphs for documenting algorithms and mathematical models. Marco concluded the session by providing insight into the MaRDMO plugin, which links established software in research data management with the different MaRDI services, thus enabling FAIR documentation of interdisciplinary workflows. The presentation of the ModelDB was met with great interest among the participants and was the subject of lively discussions afterwards and in the following days. Some aspects from these discussions have already been considered in the further design of the ModelDB.

In addition to the various presentations, staff members of the institute gave a brief insight into the different fields of activity of the institute, such as the optimal design of packaging and the use of drones in the field, during a guided tour. The highlight of the tour was a visit to the 18-meter wind tunnel, which is used to study flows in and around agricultural facilities. So MaRDI actually got to know its first cowshed, albeit in miniature.

MaRDI RDM Barcamp

MaRDI, supported by the Bielefeld Center for Data Science (BiCDaS) and the Competence Center for Research Data at Bielefeld University, will host a Barcamp on research-data management in mathematics on July 4th, 2023, at the Center for Interdisciplinary Research (ZiF) in Bielefeld.


Working group on Knowledge Graphs

The NFDI working group aims to promote the use of knowledge graphs in all NFDI consortia, to facilitate cross-domain data interlinking and federation following the FAIR principles, and to contribute to the joint development of tools and technologies that enable the transformation of structured and unstructured data into semantically reusable knowledge across different domains. You can sign up to the mailing list of the working group here.

Knowledge graphs in other NFDI consortia can be found for instance at the NFDI4Culture KG (for cultural heritage items) or at the BERD@NFDI KG (for business, economic, and related data items).


NFDI-MatWerk Conference

The 1st NFDI-MatWerk Conference to develop a common vision of digital transformation in materials science and engineering will take place from 27 - 29 June 2023 as a hybrid conference. You can still book your ticket for either on-site or online participation (online tickets are even free of charge).


Open Science Barcamp

The Barcamp is organized by the Leibniz Strategy Forum Open Science and Wikimedia Deutschland. It is scheduled for 21 September 2023 in Berlin and is open to everybody interested in discussing, learning more about, and sharing experiences on practices in Open Science.


- The department of computer science at Stanford University offers this graduate-level research seminar, which includes lectures on knowledge graph topics (e.g., data models, creation, inference, access) and invited lectures from prominent researchers and industry practitioners.

It is available as a 73-page pdf document, divided into chapters:

https://web.stanford.edu/~vinayc/kg/notes/KG_Notes_v1.pdf

and additionally as video playlist:

https://www.youtube.com/playlist?list=PLDhh0lALedc7LC_5wpi5gDnPRnu1GSyRG

- Video lecture on knowledge graphs by Prof. Dr. Harald Sack. It covers the topics of basic graph theory, centrality measures, and the importance of a node.

https://www.youtube.com/watch?v=TFT6siFBJkQ

- The Working Group (WG) Research Ethics of the German Data Forum (RatSWD) has set up the internet portal “Best Practice for Research Ethics”. It bundles information on the topic of research ethics and makes it accessible.

https://www.konsortswd.de/en/ratswd/best-practices-research-ethics/

Welcome back to the Newsletter on mathematical research data—this time, we are discussing a topic that is very much at the core of our interest and that of our previous articles: what is mathematical research data? And what makes it special?

Our very first newsletter delved into a brief definition and a few examples of mathematical research data. To quickly recap, research data are all the digital and analog objects you handle when doing research: this includes articles and books, as well as code, models, and pictures. This time, we zoom into these objects, highlight their properties, needs, and challenges (check out the article "Is there Math Data out there?" in the next section of this newsletter), and explore what sets them apart from research data in other scientific disciplines. We also report from workshops and lectures where we discussed similar questions, present an interview with Günter Ziegler, and invite you to events to learn more.

by Ariel Cotton, licensed under CC BY-SA 4.0.

We start off with a fun survey. It is again just one multiple choice question. This time, we created a decision tree, which will guide you to answer the question:

What type of mathematician are you?

You will be taken to the results page automatically, after submitting your answer. Additionally, the current results can be accessed here.

The decision tree is available as a poster for download, licensed under CC BY 4.0.

Is there Math Data out there?

“Mathematics is the queen and servant of sciences”, according to a quote by Carl F. Gauss. This opinion of Gauss can be a source of philosophical discussions. Is Mathematics even a science? Why does it play a special role? Connecting these questions to our concerns, what is the relationship between research data and these philosophical questions? We cannot arrive at a conclusion in this short article, but it is a good starting point to discuss the mindset (the philosophy, if you wish) that should be adopted regarding research data in mathematical science.

It is widely agreed that a science is any form of study that follows the scientific method: observation, formulation of hypotheses, experimental verification, extraction of conclusions, and back to observation. In most sciences (natural sciences and, to a great extent, also social sciences), observation requires gathering data from nature in the form of empirical records. In contrast, in pure mathematics observations can be made simply by reflection on known theory and logic. In natural sciences, nature is the ultimate judge of the validity or invalidity of a theory. This experimental verification also requires gathering research data in the form of empirical records that support or refute a hypothesis. In contrast, in mathematics, experimental verification is substituted by formal proofs. Such characteristics have prompted some philosophers to claim that mathematics is not really a science, but a meta-science, because it does not rely on empirical data. More pragmatically, it can tempt some researchers and mathematicians to say that (at least, pure) mathematics does not use research data. But as you will guess, in the Mathematical Research Data Initiative (MaRDI), we advocate for quite the opposite view.

Firstly, some parts of mathematics do use experimental data extensively. Statistics (and probability) is the branch of mathematics for analyzing large collections of empirical records. Numerical methods are practical tools to perform computations on experimental data. This is the case for pure mathematics as well, where we can build lists of records (prime numbers, polytopes, groups…) that are somewhat experimental.

Secondly, research data are not only empirical records. Data are any raw piece of information upon which we can build knowledge (we discussed the difference between data, information, and knowledge in the previous newsletter). When we talk about research data, we mean any piece of information that researchers can use to build new knowledge in the scientific domain in question, mathematics in this case. As such, articles and books are pieces of data. More precisely, theorems, proofs, formulas, and explanations are individual pieces of data. They have traditionally been bundled into articles and books, and stored in paper, but nowadays are largely available in digital form and accessible through computerized means.

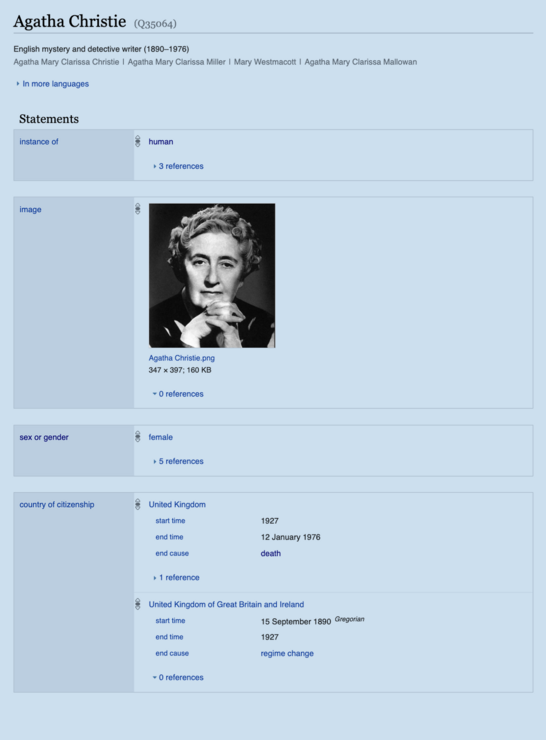

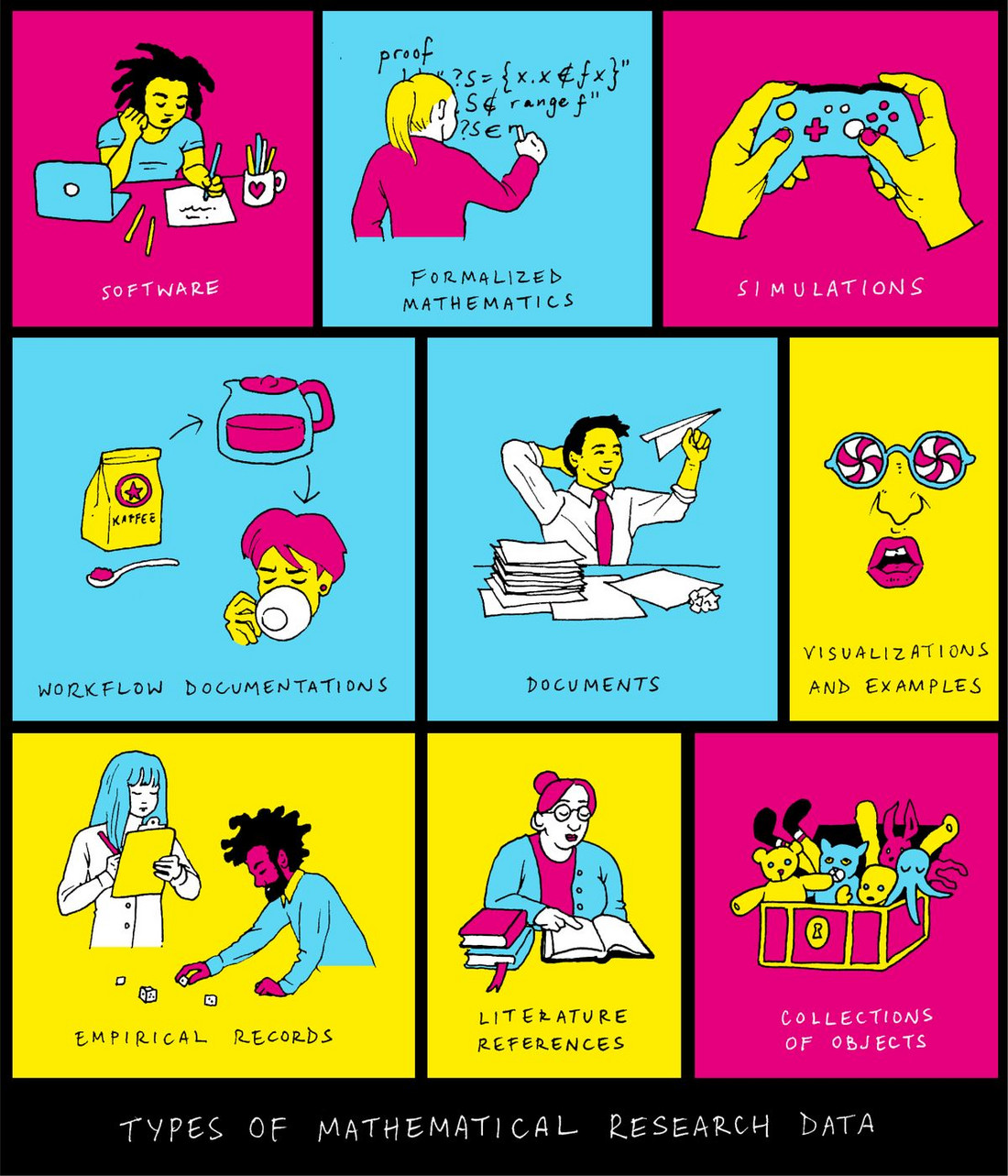

Types of data

In modern mathematical research, we can find many types of data:

Documents (articles, books) and their constituent parts (theorems, proofs, formulas…) are data. Treating mathematical texts as data (and not only as mere containers where one deposits ideas in written form) recognizes that mathematical texts deserve the same treatment as other forms of structured data. In particular, FAIR principles and data management plans also apply to texts.

Literature references are data. Although bibliographic references are part of mathematical documents, we mention them separately because references are structured data. There is a defined set of fields (such as author, title, publisher…), there are standard formats (e.g. bibTeX), and there are databases of mathematical references (e.g. zbMATH, MathSciNet…). This makes bibliographic references one of the most curated types of research data (especially in Mathematics).

Formalized mathematics is data. Languages that implement formal logic like Coq, HOL, Isabelle, Lean, Mizar, etc, are a structured version of the (unstructured) mathematical texts that we just mentioned. They contain proofs verifiable by software and are playing an increasingly vital role in mathematics. Data curation is essential to keep those formalizations useful and bound to their human-readable counterparts.

Software is data. This ranges from small scripts that help with a particular problem to large libraries that integrate into larger frameworks (Sage, Mathematica, MATLAB…). Notebooks (Jupyter…) are a form of research data that mix text explanations and interactive prompts, so they need to be handled as both documents and software.

Collections of objects are data. Classifications play a major role in mathematics. Whether gathered by hand or produced algorithmically, the result can be a pivotal point from which many other works derive. Although the output of a classification can have more applications than the process used to arrive at it, it is essential that both the input algorithm (or manual process) and the output classification are clearly documented, so that the classification can be verified and reproduced independently, apart from being reused in further projects.

Visualizations and examples are data. Examples and visual realizations of mathematical objects (including images, animations, and other types of graphics) can be very intricate and have an enormous value for understanding and developing a theory. Although examples and visualizations can be omitted in more spartan literature, if provided, they deserve the same full curation as other research data essential to logical proofs.

Empirical records are data. Of course, raw collections of information gathered from nature, intended to be processed to extract knowledge about the data itself or about the statistical method, are data that need special tools to handle. This applies to statistical databases, but also to machine learning models that require vast amounts of training data.

Simulations are data. Simulations are lists of records not measured from the outside world, but generated from a program. This is usually a representation of a state of a system, including possibly some discretizations and simplifications of reality in the modeling process. As with collections, this output simulation data is as necessary as the input source code that generates it. Simulation data is what allows us to extract conclusions, whereas the reproducibility verification requires that the processing input-to-output be performed by a third party, allowing the recognition of flaws or errors in either the input or the output, or allowing for the rerun of the simulation with different parameters.
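The interplay between input, output, and independent verification can be sketched in a few lines of Python. The toy random walk, the parameter names, and the use of a SHA-256 checksum are all assumptions chosen for illustration. The point is only that storing the parameters (including the random seed) together with the output lets a third party rerun the simulation and compare results.

```python
import hashlib
import json
import random


def simulate(params):
    """Toy random walk; fully determined by params, including the seed."""
    rng = random.Random(params["seed"])
    position, path = 0.0, []
    for _ in range(params["steps"]):
        position += rng.gauss(0.0, params["sigma"])
        path.append(round(position, 12))
    return path


def run_and_record(params):
    """Run the simulation and store input, output, and a checksum together."""
    output = simulate(params)
    digest = hashlib.sha256(json.dumps(output).encode("utf-8")).hexdigest()
    return {"params": params, "output": output, "sha256": digest}


def verify(record):
    """Rerun from the recorded parameters and compare checksums."""
    rerun = run_and_record(record["params"])
    return rerun["sha256"] == record["sha256"]


record = run_and_record({"seed": 42, "steps": 100, "sigma": 1.0})
print(verify(record))
```

Rerunning with different parameters only requires changing the dictionary passed to `run_and_record`. Bitwise agreement of floating-point output is only to be expected on the same software stack, which is one more reason to document the environment alongside the data.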

Workflow documentations are data. More general than simulations, workflows in many scientific research projects involve several steps of data acquisition, data processing, data analysis, and extraction of conclusions. An overview of the process is in itself a valuable piece of data, as it gives insights into the interplay of the different parts. A numerical algorithm can be individually robust and performant, but it may not be the best fit for the task at hand. We can only spot such issues when we have a good overview of the entire process.

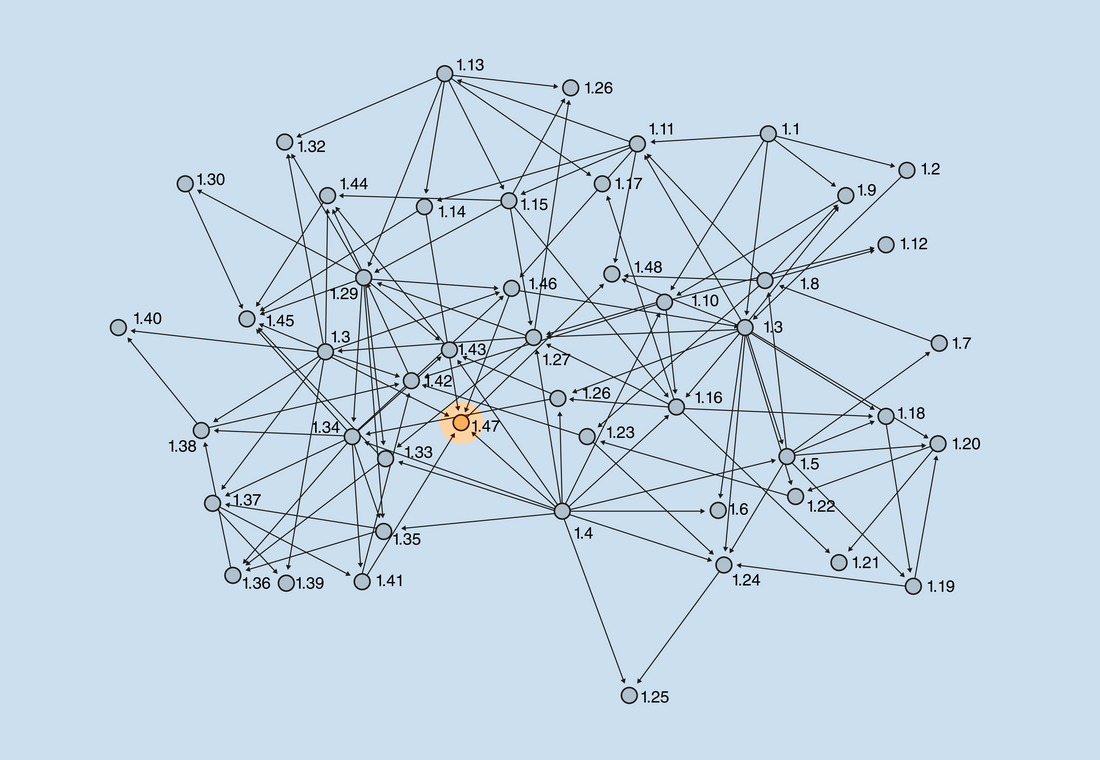

The building of mathematics

One key difference between mathematics and other sciences is the existence of proofs. Once a result is proven, it is true forever, as it cannot be overruled by new evidence. The Pythagorean theorem, for instance, is today as valid and useful as it was in the times of Pythagoras, or even in the earlier times of the ancient Babylonians and Egyptians, who knew and used it. It was the Greeks, however, who invented the concept of proof, turning mathematics from a practice into a science. Euclid’s Elements, written circa 300 BC and one of the most relevant books in the history of mathematics and mankind, perfectly represents the idea that mathematics is a building, or a network, in which each block is built on top of others, in a chain starting with some predetermined axioms. The image shows the dependency graph of propositions in Book I of the Elements.

Dependency graph of propositions in Book I of Euclid’s Elements (source). Proposition I.47 is the Pythagorean theorem.

Imagine now that we extend the above graph to include all propositions and theorems from all mathematical literature up to the current state of research. That huge graph would have millions of theorems and dependency connections, and would be futile to draw on paper. This graph does not exist yet physically or virtually except as an abstract concept. Parts of this all-mathematics graph are stored in the brains of some mathematicians, or in literature as texts, formulas, and diagrams. The breakthrough of our times is that it is conceivable to materialize this graph with today’s technology, in the form of a knowledge graph similar to those being developed at MaRDI or Wikidata. The benefits of having such a graph in a computer system are many: we will be able to find any known theorem that applies to our problems, access the fundamental blocks of literature where those results were established, find and verify logical connections in complex proofs, and gain a panoramic view of mathematics and its different areas.

The crucial point is that to succeed in such an endeavor, we must realize that mathematical knowledge is composed of pieces of data that require FAIR and complex data management and a particular infrastructure to handle data at this scale. Although it is not completely out of MaRDI’s scope, MaRDI itself does not have a goal of creating a knowledge graph of all mathematical theorems but instead focuses on the research data management required by today’s researchers. The most advanced project aiming to realize this all-mathematics graph is probably within the LEAN community (see also our interview with Johan Commelin).
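On a small scale, a dependency graph of results is easy to handle in code. The Python sketch below uses a made-up five-node graph (the labels are placeholders, not Euclid's propositions) to show two typical operations: collecting everything a theorem ultimately relies on, and listing the results in an order in which each one appears after its prerequisites. It uses `graphlib` from the standard library, available from Python 3.9.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical mini-graph: each result maps to the results its proof uses.
depends_on = {
    "axiom_1": set(),
    "axiom_2": set(),
    "lemma_A": {"axiom_1"},
    "lemma_B": {"axiom_1", "axiom_2"},
    "theorem_C": {"lemma_A", "lemma_B"},
}


def all_prerequisites(result, depends_on):
    """Every result reachable by following dependencies from `result`."""
    seen, stack = set(), [result]
    while stack:
        for dep in depends_on[stack.pop()]:
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return seen


# An order in which every result comes after all of its prerequisites.
order = list(TopologicalSorter(depends_on).static_order())

print(sorted(all_prerequisites("theorem_C", depends_on)))
print(order)
```

If the graph contained circular reasoning, `static_order` would raise a `CycleError`, so the same tool that orders the results also checks that the chain of dependencies is well-founded.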

Mathematics as a tool

The “special role” of mathematics amongst sciences comes from its role as a tool in every other science, to the point that a science is not considered mature enough until it has a mathematical formalization. The fact that mathematics can be used as the tool for doing science is the so-called “unreasonable effectiveness of mathematics in the natural sciences”. But once this role of mathematics as a tool is accepted, we must admit that, in theory, it is a very reliable tool. It is so, foremost, because of the logical building process that we described above. A proven theorem will not fail unexpectedly, and the rules of logic will not cease to exist tomorrow. But in practice, relying on tools that someone else developed requires, first, that one can trust the tool to execute its intended goal; and second, that one can learn how to use the tool effectively. This entails responsibility from mathematics as a science and from mathematicians as a community with respect to other sciences and researchers.

As happens with physical tools, a craftsman must know their tools well in order to use them efficiently. But also any modern toolmaker must state clearly the technical characteristics of the tool, the intended use, the safety precautions, its quality standards and regulations, etc. In our analogy, mathematicians must take care of impeccable preparation of the results they produce, especially when talking about algorithms and methods that will probably be applied by researchers in other fields of science.

Think of the calculus used in quantitative finance, statistical hypothesis tests to analyze data in medicine, or computers tracking the exact location of spaceships. If mathematicians did not get their derivatives and integration right, these methods would not provide reliable results, leading to wrong conclusions, often even putting people’s lives in danger. It is of utmost importance to be able to fully trust at least the theoretical basis, especially since applied science has to deal with rounding errors, components of nature that were not integrated into the original model, and the possibility of human failure. This requires verifiability of the results.

Concerning the mastering of the use of a tool, mathematical production must take into account its future reusability as a tool for other scientists. This means appropriate documentation, using appropriate standards for interoperability with existing tools, using legal licenses that allow unencumbered reusability, and in general following some form of agreed good practices of the community that can serve as guidelines for the research practice.

Modern science in the age of information and computation depends entirely on research data, but different fields have adapted their methods and practices with uneven success. Mathematics is not especially well placed in terms of managing research data and software in comparison to other fields.

Software development, especially in the open source community, has been facing data management problems for decades, meaning that some of the solutions are currently standard practices in the industry. For instance, version control (with git as a de-facto standard tool) is a basic practice to track changes and improvements to source code (or to any document or data). If we couple the version control with a public repository (GitHub, GitLab…), we get a reliable method of publishing software and working collaboratively. Once a project has many contributors, it will face merging problems when different teams develop in different directions. A solution is a continuous integration scheme with automated tests that guarantee your modifications will not break other parts of the project if adopted. The level of security and verification that industry applies to any new development in big software projects (think, for instance, of new Linux kernel releases) is certainly unparalleled in most software projects in the scientific research community (with notable exceptions such as xSDK). This is often excused on the grounds that research is by nature experimental (in the sense of untested and unfinished), but academic and theoretical research should not have lower standards than industry research.
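As a small illustration of what an automated test looks like for mathematical code, here is a sketch in Python using the standard `unittest` module. The function `trapezoid` and the two test cases are examples chosen for this newsletter, not code from any project mentioned above. In a continuous integration setup, tests like these would run automatically on every proposed change.

```python
import math
import unittest


def trapezoid(f, a, b, n=1000):
    """Composite trapezoidal rule on [a, b] with n equal subintervals."""
    h = (b - a) / n
    inner = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + inner + 0.5 * f(b))


class TestTrapezoid(unittest.TestCase):
    def test_linear_function_is_exact(self):
        # The rule is exact for linear integrands (up to rounding).
        value = trapezoid(lambda x: 2 * x + 1, 0.0, 1.0)
        self.assertAlmostEqual(value, 2.0, places=10)

    def test_sine_integral(self):
        # The integral of sin over [0, pi] equals 2.
        value = trapezoid(math.sin, 0.0, math.pi)
        self.assertAlmostEqual(value, 2.0, places=5)
```

Saved in a file, the tests can be run with `python -m unittest`. A later change that breaks `trapezoid` would then be flagged before it reaches other users.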

The video is available under the CC BY 4.0 license. You are free to share and adapt it, provided you credit the author (MaRDI).

In Conversation with Günter Ziegler

"There's nothing more successful than success," Günter Ziegler says in our latest data date: best practices will be embraced by the community. We talk about his combinatorial view on research data, the need for classifications, and the difference between everlasting mathematical results and theories in physics.

Mathematics Meets Data: Highlights from MaRDI's Barcamp

What better way to get researchers to find out that research-data management is their topic than with a Barcamp? That way, every participant can explore their own experiences, questions, and approaches.

On July 4th, MaRDI hosted its first Barcamp on Research-Data Management in Mathematics at Bielefeld University's Center for Interdisciplinary Research. It was a joint effort involving the Bielefeld mathematics faculty, MaRDI, BiCDaS, and the Bielefeld Competence Center for Research Data.

The day began with a casual breakfast, where attendees mingled, discussed expectations, and chatted about questions. A poster showcasing research data types served as a useful conversation starter (find the download link for the poster in the welcome section of this newsletter issue).

Before the session pitches commenced, Lars Kastner and Pedro Costa-Klein delivered brief talks on code reproducibility and best practices for using Docker in the Collaborative Research Center 1456 (Mathematics of the Experiment) in Göttingen, respectively.

The session pitches revealed that the Barcamp had appealed to many young researchers unfamiliar with the topic. To address this, an introductory session on "What is research data?" kicked off the discussions. Meanwhile, those more experienced with research data management discussed ways to engage the mathematical community with the topic.

One of the defining features of a Barcamp is its participant-driven agenda. Attendees had the unique opportunity to shape the discussions and focus on the topics most pertinent to their research and data management needs. This resulted in a diverse set of topics. One session on research data management plans brought together experts from the Competence Center and mathematicians to exchange perspectives and requirements. A smaller group's discussions centered on BinderHub, whereas another tackled research data repositories and their adherence to FAIR principles. Additional sessions explored the peculiarities of mathematical research data, the importance of good documentation, and a hands-on session on an online database that collects and discusses ideas on FAIR data.

This Barcamp offered the mathematics community an exceptional platform to exchange insights and inquiries regarding research-data management within their discipline.

Teaching research-data management

A survey conducted in the summer of 2021 in German mathematics departments revealed that teaching mathematicians consider their students' awareness and knowledge of good scientific practice, authorship attribution, the FAIR principles, and research software to be too low. Unfortunately, these are classical research-data management (RDM) topics. Motivated by that need and by successful, cross-disciplinary RDM courses at Bielefeld and Leipzig universities, six lectures in research-data management for mathematicians took place in Leipzig in the summer term 2023. To the teacher's knowledge, this was the first course of its kind. The large group of attendees came from a variety of career levels, including six undergraduate students, two PhD students, two postdocs, and five MaRDIans. This contributed to lively discussions centered around properties and common problems of mathematical research data, metadata standards for papers and the difficulties in deciding appropriate metadata for mathematical results, the scientific method, good scientific practice, and how to write, cite, and document mathematics. Feedback for the course was very good, with students appreciating the interactive atmosphere, the time allocated for questions, and the informal nature of the classes. A one-day course on maths RDM in Magdeburg in October will build on these first successful sessions and discuss questions of reproducibility and repositories, in addition to introductory topics. Lecture notes for both are now in the making. They will be made publicly available for a second installment next summer term for free use and reuse by any mathematician interested in the topic of RDM.

image credit: NFDI-MatWerk

MaRDMO Workshop at the NFDI-MatWerk Conference

The "1st Conference on Digital Transformation in Materials Science and Engineering - NFDI-MatWerk Conference" took place in Siegburg from 26 to 29 June 2023. With 30 talks, 17 posters, 10 workshops, and 160 participants (on-site and online), the conference provided an ideal setting for the urgently needed transformation in materials science. In addition to status updates from each NFDI-MatWerk task area and various interdisciplinary use cases, the conference initiated collaborations between different NFDI consortia and new community participants, emphasizing their role in shaping the future of NFDI-MatWerk. Several NFDI consortia, namely NFDI4Chem, NFDI4energy, DAPHNE4NFDI, and FAIRmat, also gave keynote presentations, highlighting the need for collaboration.

Marco Reidelbach from TA4 attended the conference on behalf of the MaRDI consortium to present MaRDMO, a plugin for the Research Data Management Organiser (RDMO) for documenting, publishing, and searching interdisciplinary workflows. Though participation was low at the 100-minute demonstration, discussions vital for the further development of MaRDMO ensued. The central point of the discussion was the automation of the documentation process to minimize additional work for researchers, thereby increasing the acceptance of MaRDMO. We also discussed the use of RDMO, which on paper appears to be an ideal interface to all research disciplines, but was completely unknown to the workshop participants. Here, the NFDI in particular is also called upon to take a clear stand. A good two-thirds of the consortia have declared their support for RDMO, while the remaining consortia want to rely on alternatives or are still undecided.

Overall, the NFDI-MatWerk conference showed that the defining infrastructural issues, apart from the subject-specific content, differ little or not at all from those in the MaRDI consortium and the other consortia at the conference. The construction of knowledge graphs and the harmonization of ontologies are central problems that require a joint effort and make it necessary to leave one's own comfort zone.

image credit: Heike Fliegl (FIZ)

MaRDI at CoRDI

MaRDI was present at the first Conference on Research Data Infrastructure (CoRDI), held in Karlsruhe from 12 to 14 September 2023. This interdisciplinary event brought all the NFDI consortia together to present their projects, both in general overviews and in detailed discussions. The conference was a unique opportunity to exchange experiences and ideas among a wide range of communities that have different needs but share common challenges and solutions regarding research data.

MaRDI presented three talks and two posters. The general conference proceedings are linked in the recommended further reading section at the end of this newsletter issue. We provide links to individual sections here:

Talks:

MaRDI. Building Research Data Infrastructures for Mathematics and the Mathematical Sciences. Renita Danabalan, Michael Hintermüller, Thomas Koprucki, Karsten Tabelow.

MaRDIFlow: A Workflow Framework for Documentation and Integration of FAIR Computational Experiments. Pavan L. Veluvali, Jan Heiland, Peter Benner.

Building Ontologies and Knowledge Graphs for Mathematics and its Applications. Björn Schembera, Frank Wübbeling, Thomas Koprucki, Christine Biedinger, Marco Reidelbach, Burkhard Schmidt, Dominik Göddeke, Jochen Fiedler.

Posters:

MaRDMO Plugin. Document and Retrieve Workflows Using the MaRDI Portal. Marco Reidelbach, Eloi Ferrer, Marcus Weber.

Spreading the Love for Mathematical Research Data. Tabea Bacher, Christiane Görgen, Tabea Krause, Andreas Matt, Daniel Ramos, Bianca Violet.

Math Meets Information Specialists, October 09 - 11, 2023, MPI MiS, Leipzig

MaRDI invites information specialists, librarians, data stewards, and mathematicians to discuss mathematical research data, present their own ideas and services, and make new connections in a three-day noon-to-noon workshop with talks, hands-on sessions, and a barcamp. The workshop will be held in German.

More information:

- in German

Data-Driven Materials Informatics, March 4 - May 24, 2024

The aim of this long program at IMSI is to bring together a diverse scientific audience, both between scientific fields (physical sciences, materials sciences, biophysics, etc.) and within mathematics (mathematical modeling, numerical analysis, statistics, data analysis, etc.), to make progress on key questions of materials informatics.

More information:

- in English

RDM with LinkAhead, September 29, 2023, online

At the NFDI4Chem Stammtisch, the research data management software LinkAhead will be introduced. This agile, open-source software toolbox enables professional data management in research where other approaches are too rigid and inflexible. It will make your data findable and reusable.

NFDI Code of Conduct

The Consortial assembly, comprising the speakers of each consortium, voted on 27 June 2023 to adopt the code of conduct for the NFDI. This Code of Conduct is intended to provide a binding framework for effective collaboration within the NFDI association.

More information:

- in German

- A generic JSON-based file format suitable for computations in computer algebra is described in the paper A FAIR File Format for Mathematical Software by Antony Della Vecchia, Michael Joswig, and Benjamin Lorenz. This file format is implemented in the computer algebra system OSCAR, but the paper also indicates how it can be used in a different context.

- To understand our world, we classify things. A famous example is the periodic table of elements, which describes the properties of all known chemical elements and classifies the building blocks we use in physics, chemistry, and biology. In mathematics, and algebraic geometry in particular, there are many instances of similar periodic tables, describing fundamental classification results. In his article, The Periodic Tables of Algebraic Geometry, Pieter Belmans invites you on a tour of some of these results. It appeared within the series 'Snapshots of modern mathematics from Oberwolfach'.

- Play with the educational tool Classified graphs. With this open-source web app you can draw any graph, or select one from a collection, and then compute a few invariants, such as the adjacency determinant. In the Identify mode, you are challenged to find out which of the graphs in the collection is shown as a target. The tool is part of Pieter Belmans's project Classified maths.

- In each episode of the podcast "Mathematical Objects", Katie Steckles and Peter Rowlett chat about some aspect of mathematics using a mathematical object as inspiration. The podcast is also available on YouTube.

- Proceedings of the Conference on Research Data Infrastructure (CoRDI):

https://www.tib-op.org/ojs/index.php/CoRDI/issue/view/12

Welcome to this year's final Newsletter, our seventh issue on math and data! We are happy to present you with troves of information on managing your mathematical research data. Over the past issues, we have discussed the implications of the FAIR acronym for mathematics, how to search for and structure math results using knowledge graphs, and the specialties of mathematical research data. We will close this cycle by raising these questions: What do funding bodies and NFDI members recommend in their research-data management guidelines? How can we practice this in maths? What are MaRDI's recommendations?

For answers, check out our interview with math research-data manager Christoph Lehrenfeld; the keynote article on what to write in a research-data management plan; various reports from meetings where these topics were discussed, especially with a community of librarians; and, of course, the ever-intriguing list of recommended reading.

Enjoy, and season's greetings!

As always, we start off with an illustration. This time, it depicts the different research data types in mathematics, as discussed in our previous issue.

Hot tip: Send the illustration to your colleagues and friends as a seasonal greeting.

by Ariel Cotton, licensed under CC BY-SA 4.0.

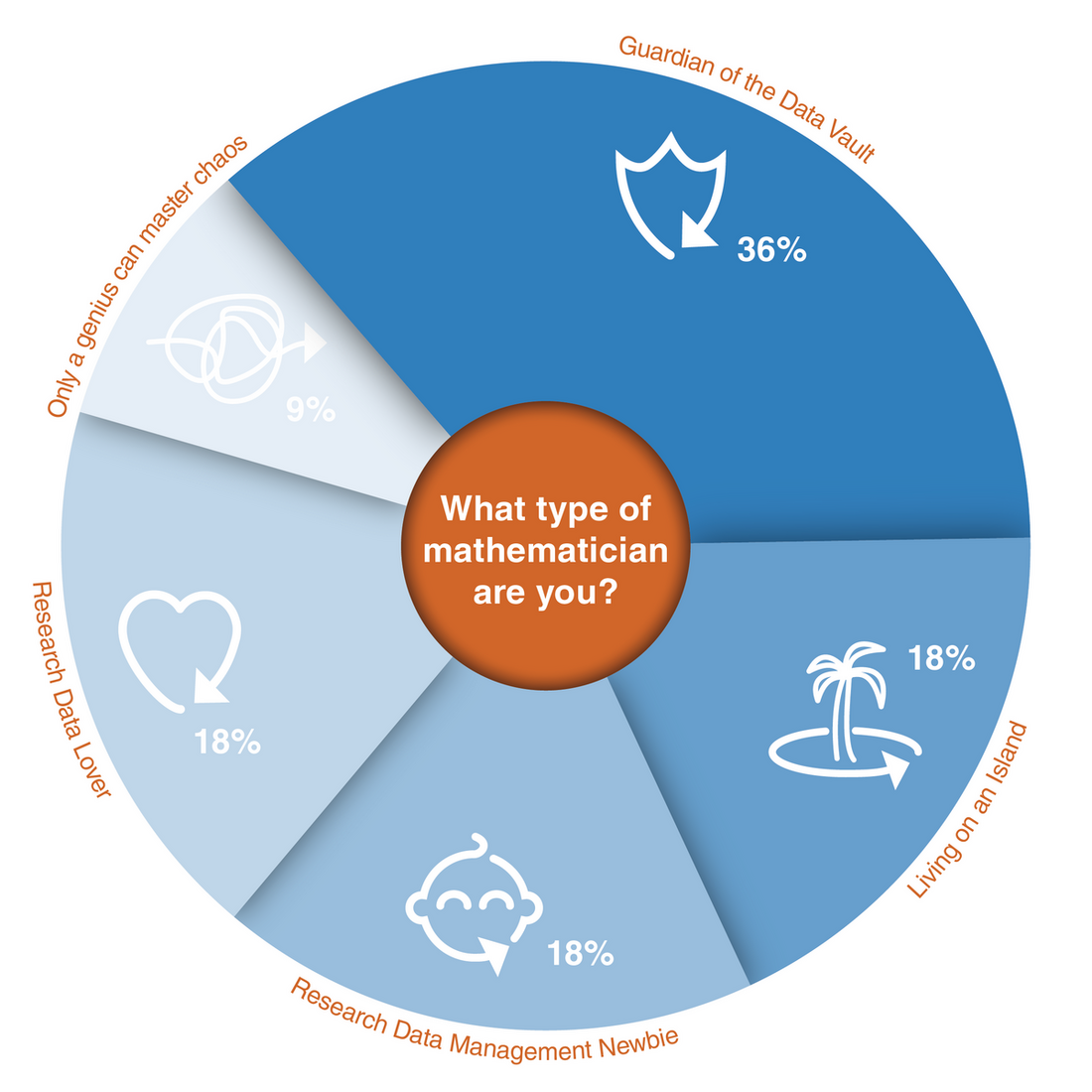

In the previous issue, we asked what type of mathematician you are. All types of mathematicians are represented significantly within our newsletter community. The largest fraction belongs to the Guardians of the Data Vault category. You can also check out this page for more information, including a free poster download.

Here are the results:

Now, back to the current topic - Research Data Management in Mathematics. Have you ever been asked about data handling in a funding proposal? The survey of this newsletter issue deals with:

Data Management: From Theory to Practice

In previous MaRDI newsletter articles, we discussed what mathematical research data is, the guiding principles that define proper, good-quality research data (the FAIR principles), and why you as a researcher should care about your data. It is now time to ask how to properly curate your research data in practice.

Research Data Management (RDM) refers to all handling of data related to a research project. It includes a planning phase (written up as a formal RDM plan and included in an application to a funding agency), ongoing data curation and plan revision during the project, and an archival phase at the conclusion of the project.